How to Forward Logs from a Kafka Cluster

Kafka is an open-source distributed event and stream-processing platform built to process demanding real-time data feeds. It is inherently scalable, with high throughput and availability.

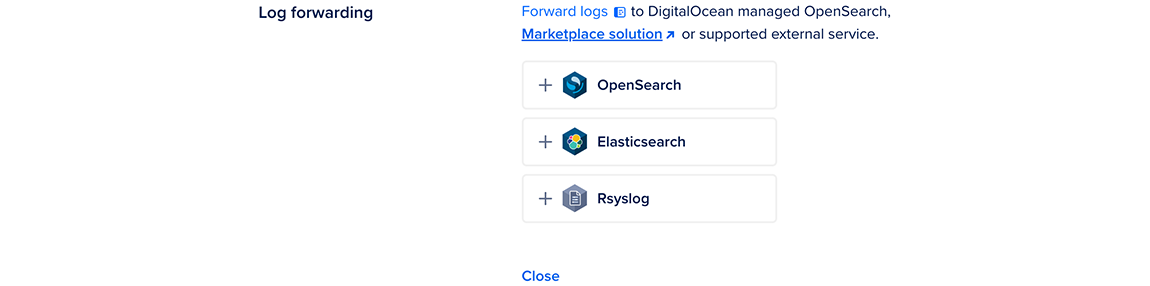

Log forwarding allows you to transmit log data from any number of sources to a centralized database cluster. You can do so by creating and managing log sinks for your database clusters via the control panel or the API. Kafka supports forwarding to OpenSearch, Elasticsearch, and Rsyslog. You can 1-Click deploy these tools to a Droplet from the Databases section of the DigitalOcean Marketplace.

Create a Log Sink Using the API
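
As a rough illustration of the API route, the sketch below builds (but does not send) an HTTP request that would create a log sink. The endpoint path, the JSON field names (`sink_name`, `sink_type`, `config`, `url`, `index_prefix`), the token, and the cluster ID are all assumptions made for this example; check them against the DigitalOcean API reference before relying on them.

```python
import json
import urllib.request

# Assumed base URL and path layout; verify against the API reference.
API_BASE = "https://api.digitalocean.com/v2"

# Destinations named in this guide.
SUPPORTED_SINKS = {"opensearch", "elasticsearch", "rsyslog"}


def build_logsink_request(token, cluster_id, sink_name, sink_type, config):
    """Build, without sending, a POST request that would create a log sink."""
    if sink_type not in SUPPORTED_SINKS:
        raise ValueError(f"unsupported sink type: {sink_type}")
    # Field names below are assumptions for illustration only.
    body = {"sink_name": sink_name, "sink_type": sink_type, "config": config}
    return urllib.request.Request(
        url=f"{API_BASE}/databases/{cluster_id}/logsink",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical values for illustration only.
example_request = build_logsink_request(
    token="YOUR_API_TOKEN",
    cluster_id="YOUR_CLUSTER_ID",
    sink_name="kafka-logs",
    sink_type="opensearch",
    config={"url": "https://opensearch.example.com:9200", "index_prefix": "kafka"},
)
# To actually send it: urllib.request.urlopen(example_request)
```

Keeping request construction separate from sending makes it possible to inspect the payload before anything reaches the API.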

Forward Logs Using the Control Panel

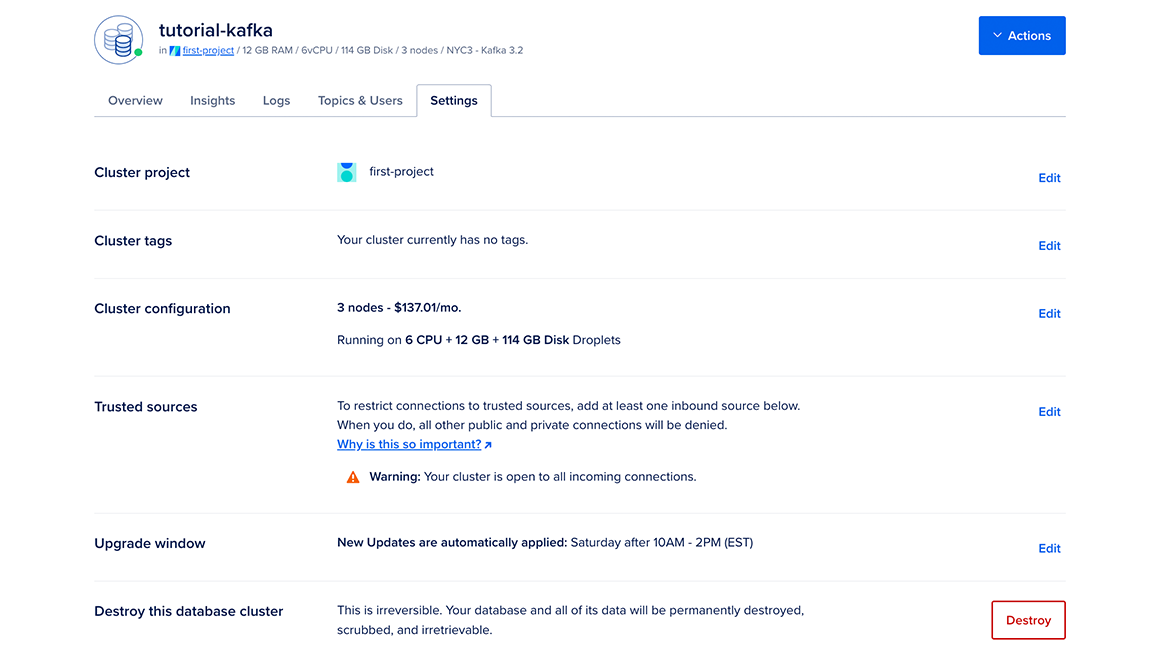

To forward logs from a database cluster in the control panel, go to the Databases page and click the name of your cluster to open its Overview page. Then click the Settings tab.

On the Settings page, in the Log forwarding section, click Edit. Select the service you want to forward logs to.

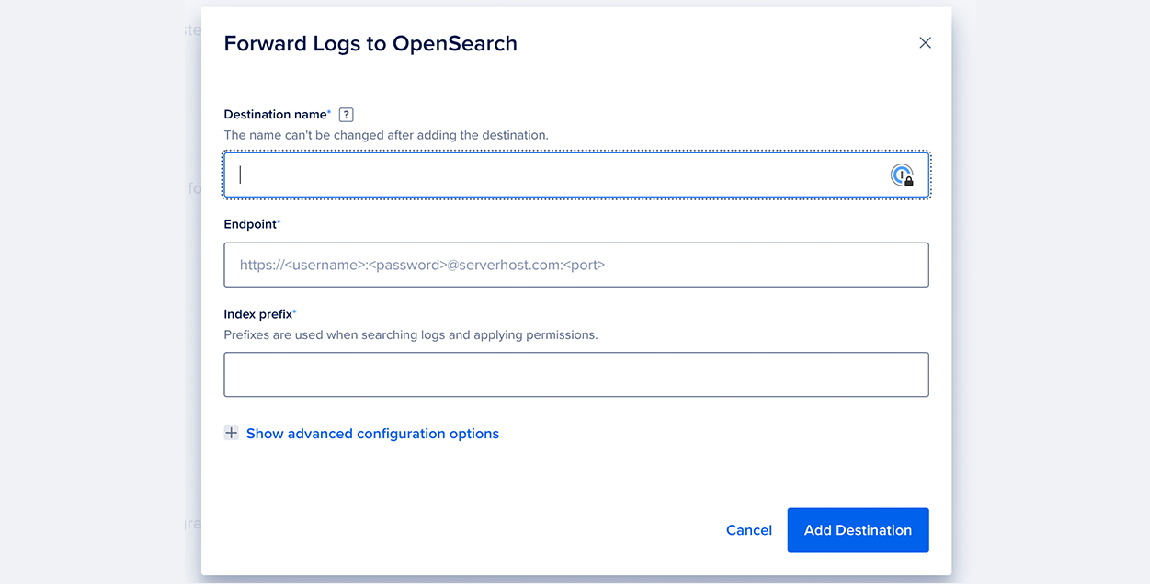

For OpenSearch and Elasticsearch, enter your destination name, endpoint URL, and index prefix. You can also click Show advanced configuration options to set the maximum number of days to store logs, the request timeout (in seconds), and the CA certificate used for authentication. To confirm your changes, click Add destination.

For Rsyslog, enter your destination name, endpoint URL, endpoint port, and message format (RFC5424, RFC3164, or custom). You can also click Show advanced configuration options to edit the log sink’s structured data, CA certificate, client key, and client certificate. To confirm your changes, click Add destination.
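
To make the difference between the two standard message formats concrete, here is a minimal sketch that renders the same log record in RFC5424 and RFC3164 layouts. The host name, application name, process ID, and the facility and severity values are hypothetical sample data; the exact lines your cluster emits may differ.

```python
from datetime import datetime, timezone

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def pri(facility, severity):
    """Syslog priority value: facility * 8 + severity."""
    return facility * 8 + severity


def format_rfc5424(ts, host, app, procid, msgid, msg,
                   facility=1, severity=6, structured_data="-"):
    """<PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG"""
    return (f"<{pri(facility, severity)}>1 {ts.isoformat()} {host} {app} "
            f"{procid} {msgid} {structured_data} {msg}")


def format_rfc3164(ts, host, app, procid, msg, facility=1, severity=6):
    """<PRI>Mmm dd hh:mm:ss HOSTNAME TAG[PID]: MSG (day is space-padded)."""
    stamp = f"{MONTHS[ts.month - 1]} {ts.day:2d} {ts:%H:%M:%S}"
    return f"<{pri(facility, severity)}>{stamp} {host} {app}[{procid}]: {msg}"


# Hypothetical sample record.
ts = datetime(2024, 3, 5, 14, 7, 9, tzinfo=timezone.utc)
print(format_rfc5424(ts, "kafka-node-1", "kafka", 1234, "-", "Broker started"))
print(format_rfc3164(ts, "kafka-node-1", "kafka", 1234, "Broker started"))
```

RFC5424 carries a full timestamp with year and UTC offset plus a structured-data field, while RFC3164 uses a shorter timestamp without a year or time zone.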

Customize Rsyslog Format

If you choose a custom message format for your Rsyslog log sink, you can use the following tags in limited Rsyslog-style templating (%tag%): HOSTNAME, app-name, msg, msgid, pri, procid, structured-data, timestamp, and timestamp:::date-rfc3339.
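
The following sketch shows how a custom template built from these tags might expand. It is a local simulation for previewing a template, not the service's actual rendering logic; the `render_template` helper, the `-` placeholder for missing values, and the sample values are all assumptions made for illustration.

```python
import re

# Tags listed in this guide as available for custom formats.
ALLOWED_TAGS = {
    "HOSTNAME", "app-name", "msg", "msgid", "pri", "procid",
    "structured-data", "timestamp", "timestamp:::date-rfc3339",
}

_TAG_PATTERN = re.compile(r"%([^%]+)%")


def render_template(template, values):
    """Replace each %tag% with its value; reject tags outside the allowed set."""
    def substitute(match):
        tag = match.group(1)
        if tag not in ALLOWED_TAGS:
            raise ValueError(f"unsupported tag: %{tag}%")
        # Assumption: a missing value is shown as "-" in this preview.
        return str(values.get(tag, "-"))
    return _TAG_PATTERN.sub(substitute, template)


# Hypothetical template and sample values.
template = "<%pri%>%timestamp:::date-rfc3339% %HOSTNAME% %app-name%: %msg%"
sample = {
    "pri": 14,
    "timestamp:::date-rfc3339": "2024-03-05T14:07:09+00:00",
    "HOSTNAME": "kafka-node-1",
    "app-name": "kafka",
    "msg": "Broker started",
}
print(render_template(template, sample))
```

Rejecting unknown tags up front catches typos such as %hostname% before the template is saved to a log sink.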

For a list of examples, see the official Rsyslog documentation.