How to Import PostgreSQL Databases into DigitalOcean Managed Databases with pg_dump

PostgreSQL is an open source, object-relational database built for extensibility, data integrity, and speed. It is fully ACID-compliant and offers strong concurrency support. It also supports dynamic loading and catalog-driven operations that let users customize its data types, functions, and more.

If you have a PostgreSQL database that you want to import into DigitalOcean Managed Databases, you need the following:

- An export of the existing database, which you can get using pg_dump or other utilities.
- A PostgreSQL database cluster created in your DigitalOcean account.
- An existing database in the database cluster to import your data into. You can use the default database or create a new database.

Export an Existing Database

One way to export data from an existing PostgreSQL database is pg_dump, PostgreSQL's backup utility for a single database. pg_dumpall is a similar utility that dumps every database in a PostgreSQL cluster, along with cluster-wide objects such as roles.

To use pg_dump, specify the connection details (such as the admin username and the database name) and redirect the command's output to a file to save the database dump. The command looks like this:

pg_dump -h <your_host> -U <your_username> -p 25060 -Fc <your_database> > <path/to/your_dump_file.pgsql>

The components of the command are:

- The -h flag to specify the IP address or hostname, if using a remote database.
- The -U flag to specify the admin user on the existing database.
- The -p flag to specify the port to connect to. Our managed databases require connecting to port 25060. If your existing database is hosted elsewhere, use its port instead (PostgreSQL’s default is 5432).
- The -Fc flag to create the dump file in the custom format, which is compatible with pg_restore.
- The name of the database to dump.
- The redirection (>) to save the database dump to a file called your_dump_file.pgsql.

Learn more in PostgreSQL’s SQL Dump documentation.

The time to export increases with the size of the database, so a large database will take some time. When the export is complete, you’ll be returned to the command prompt or notified by the client you used.
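If you export regularly, you can wrap the command in a small script. The following minimal Bash sketch is not an official DigitalOcean tool, and the function name export_db and its argument order are hypothetical. It runs the same pg_dump command shown above. It then calls pg_restore --list, which only reads the archive's table of contents, as a quick check that the file is a readable custom-format archive.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): export one database as a custom-format
# archive, then confirm pg_restore can read the archive's table of contents.
# Assumes pg_dump and pg_restore are installed and on PATH.
# Usage: export_db HOST USER PORT DATABASE OUTPUT_FILE

export_db() {
  local host="$1" user="$2" port="$3" db="$4" out="$5"

  # Same flags as the command above; -Fc selects the custom archive format.
  pg_dump -h "$host" -U "$user" -p "$port" -Fc "$db" > "$out" || return 1

  # --list only prints the archive's table of contents and makes no server
  # connection, so it is a cheap sanity check on the dump file.
  pg_restore --list "$out" > /dev/null
}
```

For example, `export_db 203.0.113.10 postgres 5432 mydb mydb.pgsql` exports a hypothetical self-hosted database. pg_dump prompts for a password unless it finds one in the PGPASSWORD environment variable or a ~/.pgpass file.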

Import a Database

To import the dump, first make sure that you can connect to your target database with psql. Then find the connection URI for the target database that you want to import the existing data into.

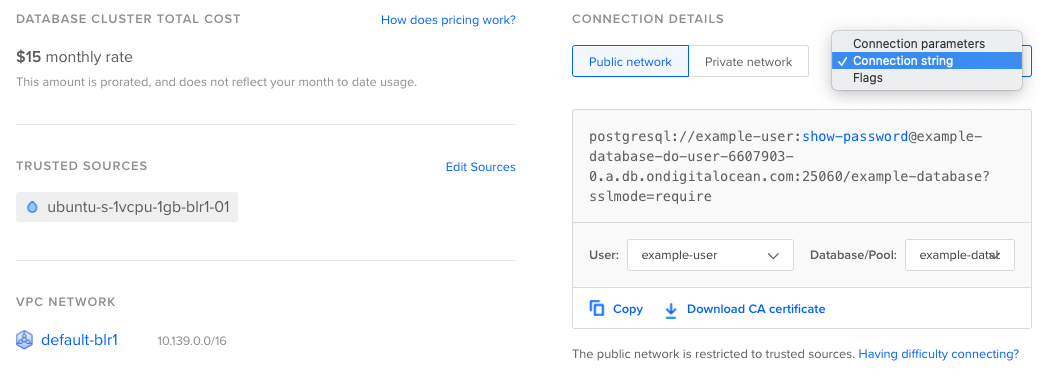

If you want to import to the default target database with the default user, you can use the public network connection string from the cluster’s Overview page, under Connection Details and in the drop-down menu.

If you want to import to a different target database or with a different user, select your desired specifications using the User and Database/Pool drop-down menus below.

Click the blue, highlighted show-password string to reveal your password, then copy the URI.
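Before you import anything, you can confirm that the URI works. This minimal Bash sketch uses a hypothetical check_target function. It assumes psql is installed and runs SELECT 1 against the target. Always quote the URI on the command line, because characters that can appear in connection strings, such as ? and &, are special to the shell.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): verify that psql can connect and
# authenticate with the given connection URI. Assumes psql is on PATH.
# Usage: check_target CONNECTION_URI

check_target() {
  local uri="$1"
  # The URI stays quoted so the shell does not interpret ? or & in it.
  if psql -d "$uri" -c 'SELECT 1;' > /dev/null; then
    echo "target reachable"
  else
    echo "cannot connect to target" >&2
    return 1
  fi
}
```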

Once you have the connection URI for the target database and user you want to use, check whether your dump file is in custom format or plain-text format, and then follow the applicable steps below. We recommend exporting dumps in custom format because it is compressed by default and lets you restore tables selectively.
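If you are not sure which format a dump is in, you can inspect its first bytes. This sketch assumes the file is either an uncompressed plain-text dump or a custom-format archive, which pg_dump writes with the 5-byte signature PGDMP at the start. The dump_format function name is hypothetical. You can also run pg_restore --list on the file, since it fails on a plain-text dump.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): print "custom" if FILE starts with the
# PGDMP signature of a pg_dump custom-format archive, otherwise print "text".
# Assumes the file is an uncompressed plain-text dump or a custom-format
# archive; other formats (tar, directory, compressed text) are not handled.
# Usage: dump_format DUMP_FILE

dump_format() {
  local file="$1"
  if [ ! -f "$file" ]; then
    echo "no such file: $file" >&2
    return 1
  fi
  # head -c reads only the first 5 bytes, so large dumps are not scanned.
  if [ "$(head -c 5 "$file")" = "PGDMP" ]; then
    echo custom
  else
    echo text
  fi
}
```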

Import Data in Custom Format

To import a custom-format dump, use the pg_restore command:

pg_restore -d <your_connection_URI> --jobs 4 <path/to/your_dump_file.pgsql>

The components of the command are:

- The -d flag, followed by your connection URI, to specify the target database.
- The --jobs flag, followed by the number of concurrent jobs to run the import with. A higher number can speed up the import, but each job uses additional CPU and its own connection to the database.
- The path to your local dump file.

If the database you’re importing has multiple users, you can add the --no-owner flag to avoid permission errors. Even without this flag, the import completes, but you may see a number of error messages.
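You can also wrap the custom-format import in a function. This minimal Bash sketch uses a hypothetical restore_custom function. By default it uses the 4 jobs from the command above, and it accepts a different job count as an optional third argument. It always passes --no-owner, so remove that flag if you want pg_restore to try to preserve the original object ownership.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): restore a custom-format dump into
# the target database. Assumes pg_restore is installed and on PATH.
# Usage: restore_custom CONNECTION_URI DUMP_FILE [JOBS]

restore_custom() {
  local uri="$1" dump="$2" jobs="${3:-4}"   # default to 4 concurrent jobs

  # --no-owner skips restoring object ownership, which avoids errors when
  # the source database's users do not exist on the target cluster.
  pg_restore -d "$uri" --jobs "$jobs" --no-owner "$dump"
}
```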

Reference PostgreSQL’s documentation for more information about its Backup and Restore functions.

Import a Text Format Dump

To import a plain-text dump, use the following command:

psql -d <your_connection_URI> < <path/to/your_dump_file.pgsql>

The components of the command are:

- The -d flag, followed by your connection URI, to specify the target database.
- The less-than symbol (<), which redirects the dump file to psql’s standard input so that psql executes its SQL against the target database.
- The path to your local dump file.
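By default, psql keeps executing the rest of the file after a statement fails. That can leave a partially imported database without an obvious failure. This minimal Bash sketch uses a hypothetical restore_text function and runs the same command as above with the psql variable ON_ERROR_STOP set. With that variable set, psql stops at the first error and exits with status 3.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): load a plain-text dump with psql and
# stop at the first failed statement. Assumes psql is installed and on PATH.
# Usage: restore_text CONNECTION_URI DUMP_FILE

restore_text() {
  local uri="$1" dump="$2"
  # ON_ERROR_STOP=1 makes psql abort the script on the first SQL error
  # instead of continuing with the remaining statements.
  psql -v ON_ERROR_STOP=1 -d "$uri" < "$dump"
}
```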

Reference PostgreSQL’s documentation for more information about its Backup and Restore functions.

After Importing

Once the import is complete, you can update the connection information in any applications using the database to use the new database cluster.

We also recommend running PostgreSQL’s ANALYZE command, which collects statistics about the contents of your tables. The query planner uses these statistics to choose efficient execution plans, so a freshly imported database can run queries more slowly until they are collected. Learn more in the PostgreSQL wiki introduction to VACUUM, ANALYZE, EXPLAIN, and COUNT.
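You can run ANALYZE from your shell with the same connection URI. This minimal Bash sketch uses a hypothetical analyze_target function. A bare ANALYZE with no table name processes every table in the database that the connecting user has permission to analyze.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): refresh planner statistics on the
# target database after an import. Assumes psql is installed and on PATH.
# Usage: analyze_target CONNECTION_URI

analyze_target() {
  local uri="$1"
  # ANALYZE without a table list covers every table the user may analyze.
  psql -d "$uri" -c 'ANALYZE;'
}
```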