Machines are high-performance computing resources for scaling AI applications.

ML-in-a-Box is a generic data science stack for machine learning (ML). It includes stable and up-to-date installations of widely used ML and mathematics software, such as:

XGBoost and Scikit-learn provide machine learning algorithms outside of deep learning, such as gradient-boosted decision trees.

ML-in-a-Box also includes a full setup that you can use with Paperspace’s GPUs:

The software stack is built on a standard Paperspace Ubuntu VM template.

For more information on what is included and how to install the stack, read through the ML-in-a-Box GitHub repository.

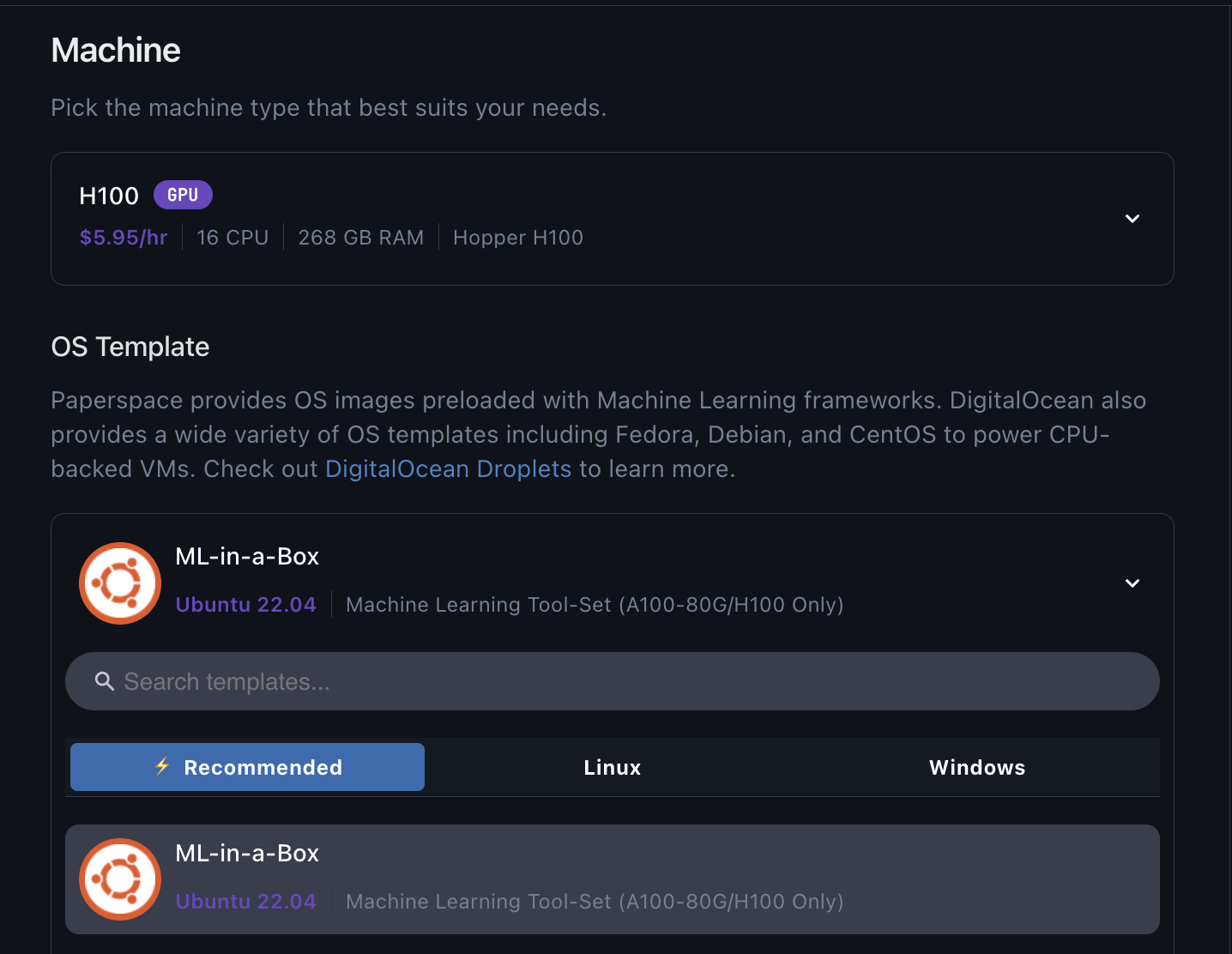

Find the ML-in-a-Box Ubuntu 22.04 template and select it.

Since ML-in-a-Box is a terminal-only machine accessed over SSH, connect to it from your terminal with an SSH command as shown:

ssh paperspace@123.456.789.012

This command connects as the user paperspace to the machine’s IP address and uses your SSH key to authenticate. It should bring you to a command prompt in the machine’s terminal interface.

On the machine, your home directory is set to /home/paperspace.

The shell is /bin/bash, and your username is paperspace.

Within this, there are various directories, but most are not relevant to running ML as a user.
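To confirm these session details from Python once you are logged in, a minimal sketch such as the following can help. The function name `describe_session` is hypothetical and is not part of the ML-in-a-Box stack; it uses only the standard library.

```python
import getpass
import os


def describe_session():
    """Collect the current user's name, home directory, and login shell."""
    try:
        user = getpass.getuser()
    except (KeyError, OSError, ImportError):
        # The user name cannot always be resolved, e.g. in minimal containers.
        user = "unknown"
    return {
        "user": user,
        "home": os.path.expanduser("~"),
        # SHELL may be unset in non-interactive contexts, hence the fallback.
        "shell": os.environ.get("SHELL", "unknown"),
    }


if __name__ == "__main__":
    for key, value in describe_session().items():
        print(f"{key}: {value}")
```

On the machine described above, the values should correspond to paperspace, /home/paperspace, and /bin/bash.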

Common data science commands available in /usr/bin include:

apt awk aws bunzip2 bzip2 cat cc comm crontab curl cut df dialog diff docker echo emacs ffmpeg find free gcc git grep gunzip gzip head java joe jq ln ls make man md5sum mkdir mv nano nohup nvidia-smi openssl ping rm rmdir rsync screen sed ssh sudo tail tar tee tmux top tr uniq unrar unzip vi vim watch wget whoami which xargs xz zip

Several other commands are in /usr/local/bin:

cmake python python3

You can find more commands in /home/paperspace/.local/bin:

accelerate cython deepspeed gdown gradient ipython ipython3 jupyter jupyter-contrib-nbextension jupyter-lab pip pip3 tensorboard wandb

You can find other commands under /usr/local/cuda/bin:

nvcc nvlink

If needed, list the contents of these directories to see the full sets of commands.
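One way to check which of these commands resolve on your PATH is sketched below. The `COMMANDS` tuple is only a sample of the names listed above, and `locate_commands` is a hypothetical helper. It assumes the directories in question are on your PATH, which may not hold for every directory (for example /usr/local/cuda/bin).

```python
import shutil

# A small sample of the commands named above; extend the tuple as needed.
COMMANDS = ("git", "docker", "tmux", "nvidia-smi", "python3", "jupyter", "nvcc")


def locate_commands(names):
    """Map each command name to its resolved path, or None if not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for name, path in locate_commands(COMMANDS).items():
        print(f"{name:12} {path or 'not found on PATH'}")
```

A command reported as not found may still exist on disk; it only means that the current PATH does not include its directory.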

Similarly, available Python modules include:

accelerate bitsandbytes boto3 cloudpickle cv2 cython datasets deepspeed diffusers future gdown gradient ipykernel ipywidgets jsonify jupyter_contrib_nbextensions jupyterlab jupyterlab_git matplotlib nltk numpy omegaconf pandas peft PIL safetensors scipy seaborn sentence_transformers skimage sklearn spacy sqlalchemy tabulate tensorflow timm tokenizers torch torchaudio torchvision tqdm transformers wandb xgboost

With these commands and modules, you can start using the supplied ML software stack or install further items if needed.
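Before installing anything further, it can be useful to check which modules are already importable. The following is a minimal sketch that uses the standard library's `importlib.util.find_spec`; the `MODULES` tuple is a sample of the names listed above, and `find_missing` is a hypothetical helper.

```python
import importlib.util

# A sample of the module names listed above; extend as needed.
MODULES = ("numpy", "pandas", "sklearn", "torch", "tensorflow", "transformers", "xgboost")


def find_missing(modules):
    """Return the top-level module names that cannot be located."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(MODULES)
    if missing:
        print("Not installed:", ", ".join(missing))
    else:
        print("All listed modules are available.")
```

This only locates the modules without importing them, so it runs quickly even for large packages such as torch or tensorflow.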

You can try out various basic commands to verify that the main libraries included with the machine work.

Start PyTorch and display GPU:

python

Python 3.11.7 (main, Dec 8 2023, 18:56:58) [GCC 11.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> torch.cuda.is_available()

True

>>> torch.cuda.get_device_name(0)

'NVIDIA H100 80GB HBM3'

For more information about the environment, exit the Python interpreter and run the following command from the shell:

python -m torch.utils.collect_env
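The interactive session above queries only device 0. On a multi-GPU machine, a short script along these lines could list every device that PyTorch sees. This is a sketch: `list_cuda_devices` is a hypothetical helper name, and the function returns an empty list if PyTorch is not installed or no CUDA device is visible.

```python
import importlib.util


def list_cuda_devices():
    """Return the names of all CUDA devices PyTorch can see (empty if none)."""
    if importlib.util.find_spec("torch") is None:
        return []  # PyTorch is not installed in this environment.
    import torch

    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


if __name__ == "__main__":
    for index, name in enumerate(list_cuda_devices()):
        print(f"GPU {index}: {name}")
```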

Start TensorFlow and display GPU:

python

>>> import tensorflow as tf

>>> gpus = tf.config.list_physical_devices('GPU')

>>> for gpu in gpus: print(gpu)

PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:1', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:2', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:3', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:4', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:5', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:6', device_type='GPU')

PhysicalDevice(name='/physical_device:GPU:7', device_type='GPU')

>>> tf.test.is_built_with_cuda()

True
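Listing devices confirms that TensorFlow can see the GPUs, but not that it can compute on them. As a further check, the sketch below multiplies two small random matrices and reports the device that ran the operation. `gpu_matmul_check` is a hypothetical helper name; it falls back to the CPU if no GPU is visible and returns None if TensorFlow is not installed.

```python
import importlib.util


def gpu_matmul_check(size=256):
    """Multiply two random matrices and return the device that ran the op.

    Returns None when TensorFlow is not installed.
    """
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf

    # Use the first GPU if one is visible, otherwise fall back to the CPU.
    device = "/GPU:0" if tf.config.list_physical_devices("GPU") else "/CPU:0"
    with tf.device(device):
        a = tf.random.normal((size, size))
        b = tf.random.normal((size, size))
        product = tf.matmul(a, b)
    return product.device


if __name__ == "__main__":
    print("Matrix multiplication ran on:", gpu_matmul_check())
```

On a working GPU machine, the printed device string should end in a GPU identifier rather than a CPU one.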

Display GPUs directly by running nvidia-smi from the shell:

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.129.03 Driver Version: 535.129.03 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA H100 80GB HBM3 On | 00000000:00:05.0 Off | 0 |

| N/A 25C P0 74W / 700W | 155MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA H100 80GB HBM3 On | 00000000:00:06.0 Off | 0 |

| N/A 26C P0 71W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 2 NVIDIA H100 80GB HBM3 On | 00000000:00:07.0 Off | 0 |

| N/A 27C P0 77W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 3 NVIDIA H100 80GB HBM3 On | 00000000:00:08.0 Off | 0 |

| N/A 25C P0 76W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 4 NVIDIA H100 80GB HBM3 On | 00000000:00:09.0 Off | 0 |

| N/A 25C P0 72W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 5 NVIDIA H100 80GB HBM3 On | 00000000:00:0A.0 Off | 0 |

| N/A 27C P0 74W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 6 NVIDIA H100 80GB HBM3 On | 00000000:00:0B.0 Off | 0 |

| N/A 26C P0 74W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 7 NVIDIA H100 80GB HBM3 On | 00000000:00:0C.0 Off | 0 |

| N/A 26C P0 76W / 700W | 2MiB / 81559MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+
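If you need the same information in a script, for example to log memory usage, nvidia-smi also offers a CSV query mode through its `--query-gpu` and `--format` options. The following is a minimal sketch: `parse_gpu_csv` and `query_gpus` are hypothetical helper names, and the chosen fields are only one possible selection.

```python
import shutil
import subprocess

QUERY_FIELDS = "index,name,memory.used,memory.total"


def parse_gpu_csv(text):
    """Parse output of nvidia-smi --query-gpu=... --format=csv,noheader,nounits."""
    gpus = []
    for line in text.strip().splitlines():
        index, name, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "index": int(index),
            "name": name,
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return gpus


def query_gpus():
    """Run nvidia-smi and return one dict per GPU (empty list if unavailable)."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []  # The driver may be missing or not responding.
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"GPU {gpu['index']}: {gpu['name']} "
              f"({gpu['memory_used_mib']} / {gpu['memory_total_mib']} MiB)")
```

Keeping the parser separate from the subprocess call makes it possible to test the parsing on saved output without a GPU present.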

Since ML-in-a-Box is generic, how you use this stack depends on the needs of your project. Some common next steps you could take are the following:

To report any issues with the software, or to provide feedback or make requests, see Paperspace Community or contact support.